Talk:Unicode/Archive 6

| This is an archive of past discussions. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current talk page. |


Use of 16 bits

Twice, "It was later discovered that 16 bits allowed for far more characters than originally hypothesized. This breakthrough[...]" has been added to the article. It's wrong; that same escape mechanism could have been created by any programmer since the 1950s. It was political will, not any new discovery or breakthrough that created that additional character space.--Prosfilaes (talk) 23:25, 15 June 2009 (UTC)

- Well, with Joe Becker's original plan there would have been no room for Egyptian Hieroglyphs, Tangut, Old Hanzi, etc. regardless of political will, so the invention of the surrogate mechanism did make it practically possible to encode such scripts. Of course, as you say, there still needs to be the political will to encode historic scripts, and that is something that Joe Becker and the other founding fathers of Unicode probably did not anticipate. BabelStone (talk) 11:45, 16 June 2009 (UTC)
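The arithmetic behind the surrogate mechanism mentioned above can be sketched in a few lines of Python. This is only an illustration of the UTF-16 calculation; the function name `to_surrogates` and the constant name are made up for this example.

```python
from typing import Tuple


def to_surrogates(code_point: int) -> Tuple[int, int]:
    """Split a supplementary code point (U+10000..U+10FFFF) into a UTF-16 surrogate pair."""
    if not 0x10000 <= code_point <= 0x10FFFF:
        raise ValueError("not a supplementary code point")
    offset = code_point - 0x10000        # 20-bit value
    high = 0xD800 + (offset >> 10)       # top 10 bits select the high surrogate
    low = 0xDC00 + (offset & 0x3FF)      # bottom 10 bits select the low surrogate
    return high, low


# 1,024 high surrogates times 1,024 low surrogates: the extra code space
# that 16-bit code units can reach through pairs.
SUPPLEMENTARY_CODE_POINTS = 0x400 * 0x400

# U+13000 is the first code point of the Egyptian Hieroglyphs block.
print([hex(u) for u in to_surrogates(0x13000)])
print(SUPPLEMENTARY_CODE_POINTS)
```

Nothing in the calculation goes beyond shifts and masks, which is consistent with the point that the mechanism itself required no new discovery.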

codepoint-layout graphics on offer

I dropped in a graphic showing the layout of the Unicode planes that I think might be newbie-friendly, just above the table. I'm not expert enough an editor to know all the correct incantations for making it appear at just the right optimal width.

I also have uploaded a graphic of the BMP layout at  which perhaps could be worked into the BMP article, where there's already a graphic but this one is maybe a little prettier. Both of them are created in Apple's "Keynote" presentation tool, and I'm volunteering to do two things:

- Edit them to bring up to date with the latest/greatest Unicode revs

- upload the source format so that other people can take care of #1 in case I become evil or die; .key is not exactly an open format but it's editable on many computers out there. Is this a reasonable thing to think of doing? Tim Bray (talk) 07:03, 21 August 2009 (UTC)

- Re 1: Note that the picture File:Unicode Codespace Layout.png already is outdated. The latest version of the Unicode standard is 5.1.0, and it contains 100,713 characters.

- I don't think it is helpful to have an out-of-date graphic, especially as it is so in-your-face. I suggest removing it for now, and putting it back when it has been updated to Unicode 5.1. But be aware that 5.2 is due to be released at the end of September, so it may be best to wait a few weeks, and update a 5.2 friendly version once 5.2 has been released. BabelStone (talk) 12:30, 21 August 2009 (UTC)

- Oh, and the labels on the graphic are really bad -- the SMP is not just "dead languages and math", and the SSP is not just "language tags". I strongly suggest changing the labels to simply use the plane names. Anyway, I am going to remove the graphic until it has been fixed to correspond to the current version of Unicode and has suitable labels. BabelStone (talk) 12:36, 21 August 2009 (UTC)

- Yes, I think that's a good idea. But you should still be aware that Plane 3 (provisionally named the "Tertiary Ideographic Plane") is not yet live, but will be defined in Unicode 6.0 (next year) ... although after that the top-level allocation of code space should be stable for our lifetime (famous last words). BabelStone (talk) 22:48, 21 August 2009 (UTC)

..."which uses Unicode as the sole internal character encoding"

i think this needs re-wording - in strict terms, "Unicode" is not an encoding. —Preceding unsigned comment added by 194.106.126.97 (talk) 08:13, 29 September 2009 (UTC)

Classical Greek version of Cyrillic

Where is the uppercase circumflex Omega in the Cyrillic alphabet? Does it even exist? If you turn the circumflex character on its side, it might look a lot like the rough or soft breathing mark, but that is not the same thing as a true circumflex. The uppercase circumflex Omega is not used in Modern Greek, I guess. 216.99.201.190 (talk) 19:26, 25 October 2009 (UTC)

Who does need to understand the importance of unicode?

this link should be inserted somewhere:

- [1] —Preceding unsigned comment added by 80.98.135.63 (talk) 10:11, 18 January 2010 (UTC)

- Yeah, somewhere, just not on Wikipedia. See WP:ELNO. BabelStone (talk) 10:06, 11 March 2010 (UTC)

Formatting References

I've taken to formatting some of the bare URLs here, using the templates from WP:CT. Omirocksthisworld(Drop a line) 21:25, 16 March 2010 (UTC)

Unicode block names capitalization (Rename and Move)

Here is a proposal to rename Unicode block names into regular (Unicode) casing, e.g. C0 controls and basic Latin be renamed and moved to C0 Controls and Basic Latin. Some 18 block pages are affected. -DePiep (talk) 09:08, 6 October 2010 (UTC)

Categories

Where is the list of categories of unicode characters? I found the arrow category article. I want to see other categories. I go to this article, and no list of categories to be found. Rtdrury (talk) 19:44, 8 February 2011 (UTC)

- As the TOC says, here: Unicode#Character_General_Category. -DePiep (talk) 20:31, 8 February 2011 (UTC)

Unicode is not just text

The lead-in paragraph associates Unicode as something that is just for text, but that is not correct. Consider that there are control code points (e.g., ASCII control codes) and symbols (e.g., dingbats). Also, code points, characters and graphemes are separate things but the lead-in notes that 107,000 characters (not code points nor graphemes) are presently in the standard. I haven't read the rest of the article. —Preceding unsigned comment added by TechTony (talk • contribs) 12:59, 17 July 2010 (UTC)

- "Plain text" by Unicode's terminology though, no? — Preceding unsigned comment added by 193.120.165.70 (talk) 10:22, 29 November 2011 (UTC)

Summary of Unicode character assignments has been nominated for deletion. You are invited to comment on the discussion at the article's entry on the Articles for deletion page.BabelStone (talk) 23:36, 3 February 2012 (UTC)

AfD notice Mapping of Unicode graphic characters

For deletion. See Wikipedia:Articles for deletion/Mapping of Unicode graphic characters. -DePiep (talk) 00:39, 12 February 2012 (UTC)

Template:UCS characters has been nominated for deletion. You are invited to comment on the discussion at the template's entry on the Templates for discussion page. DePiep (talk) 11:54, 2 February 2012 (UTC)

- This template is now being proposed & developed into an infobox. See Template:UCS characters/sandbox and Template talk:UCS characters. Since my communication with my opponent is not clear, I'd like someone else to join in there. I am not yet convinced of the new form it has, but I don't want to throw away a good idea too. -DePiep (talk) 10:12, 17 February 2012 (UTC)

Merger proposal: RLM into LRM

Proposed: merge bidi Right-to-left mark (RLM) into LRM article. See: Talk:Left-to-right mark#Merger proposal: RLM into LRM. -DePiep (talk) 21:36, 28 February 2012 (UTC)

Online tool for creating Unicode block articles

In the English Wikipedia, quite a few articles about Unicode blocks are still missing (see Unicode block). Now I have created an online generator that takes over all the tedious work of typing in character numbers, characters, etc. Just enter the Unicode range and you'll get a wikitable for pasting into a Wikipedia edit window. You can even use templates to add introductory text and external links. Try it out. --Daniel Bunčić (de wiki · talk · en contrib.) 15:15, 6 April 2012 (UTC)

- See Category:Unicode charts. -DePiep (talk) 17:29, 6 April 2012 (UTC)

How are the characters in each version of the Unicode standard counted?

I just tried to verify the number of characters in Unicode 6.2.0 and I got a slightly different number. So where are these from? How are they counted? Simar0at (talk) 17:01, 25 November 2012 (UTC)

- Add up the characters listed in UnicodeData for 6.2 excluding PUA characters and surrogate code points (i.e. all graphic, format and control characters). The result should be 110,182. However, the official Unicode 6.2.0 page gives a count of 110,117 characters -- "That is the traditional count, which totals up graphic and format characters, but omits surrogate code points, ISO control codes, noncharacters, and private-use allocations". This implies that the Wikipedia counts, which include the 65 control characters, are incorrect, and only format and graphic characters should be counted. BabelStone (talk) 18:31, 25 November 2012 (UTC)

- Good catch, Simar0at. re Babelstone: I'd say that Unicode is wrong (inconsistent). The 65 control characters (!) are assigned code points, full stop. (they are the General Category Cc. UTF-8 uses them. Which internet page does not have a newline control character?). Very tiresome that Unicode consorts invents a different definition for every exception. A bit of toughness on their own principles would save a lot of problem solving time on our side. -DePiep (talk) 18:44, 25 November 2012 (UTC)

- I've added a note explaining what the character count for each version represents. I am in favour of excluding control characters from the counts, as the Unicode page linked above specifies that it is traditional to only count graphic and format characters. PUA characters and noncharacters are also assigned code points, but no-one counts them, so I don't follow that argument. The argument for excluding control characters is that they do not have assigned Unicode names, whereas all graphic and format characters do (even if algorithmically generated names in some cases). BabelStone (talk) 19:31, 25 November 2012 (UTC)

- Sort of proving my point. they do not have assigned Unicode names: that is turning it upside down. Their non-naming is because of the control-thing. Unicode names are not that defining, they are using a definition (like script name). Next, PUA and surrogate characters are exempted quite logically (even I can understand that; these are called "abstract characters" - I have no problem with that). (Basics). But the 65 control characters are assigned, and can be used as any other character (newline anyone?). As any other assigned char, they will never change. Yes there is a grouping: Graphic-Formatting-Control, but that does not imply we should leave out one of these three. (and there is always the "format control" and "bidi control" - another great Unicode obfuscation). Noncharacters [sic] and reserved code points are not assigned - by definition. There is no reason to exempt the "control characters" from counting. The most signifying word you write is "traditional". I don't agree, that is not an argument at all. Or is that, really, a Unicode consortium argument? That is why I wrote: "for every exemption another rule". A subdefinition to accommodate every other extreme situation. Babelstone, I am reading your writings with pleasure (sure they are more relaxed and sympathetic than what I write), but now arguing "I am in favour of ..." about Unicode is another example of incidental logic. OR even! -DePiep (talk) 20:36, 25 November 2012 (UTC)
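The figure of 65 control characters in this thread can be checked against the General Category data shipped with Python. This is a rough sketch rather than the official counting procedure: the `unicodedata` module reflects whichever Unicode version the interpreter bundles, so only the Cc count (which has not changed between versions) is computed, and the two Unicode 6.2.0 totals are simply copied from the comments above.

```python
import sys
import unicodedata

# Count code points whose General Category is Cc (control):
# U+0000..U+001F and U+007F..U+009F.
control_count = sum(
    1
    for cp in range(sys.maxunicode + 1)
    if unicodedata.category(chr(cp)) == "Cc"
)
print(control_count)

# Totals quoted in this thread for Unicode 6.2.0 (copied, not computed here).
count_with_controls = 110_182   # graphic + format + control
traditional_count = 110_117     # graphic + format only
print(count_with_controls - traditional_count)
```

Both printed values are 65, so the two totals differ by exactly the control characters.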

Next version of Unicode

User:Vanisaac added speculative information about the next version of Unicode sourced to a private email which I reverted. I am copying below the discussion between myself and Vanisaac on this issue from my talk page. BabelStone (talk) 09:42, 3 December 2012 (UTC)

Of course you can source something to a private correspondence. The information meets all of the criteria for a questionable source:

- the material is neither unduly self-serving nor an exceptional claim - the expected date of publication is not contentious in any way.

- it does not involve claims about third parties - as an officer of the standards committee, he is a first party

- it does not involve claims about events not directly related to the source - the source is directly involved in the event

- there is no reasonable doubt as to its authenticity - if you doubt the information, I can forward the email to you.

- the article is not based primarily on such sources - this is a minor claim about a single section of a large article.

It meets all criteria for a questionable source - it is not a contentious, exceptional, critical, or otherwise spurious; the source has direct knowledge of the event; and the claim is purely informative and is not the basis for larger claims within the article. I've been through this before at the Reliable Sources board when we were documenting Tibetan Braille. However, I've found a PRI that mentions 6.3, so I'll start building it back. VanIsaacWS Vexcontribs 09:08, 3 December 2012 (UTC)

- I disagree -- private correspondence fails the verifiability test. Whatever Rick may say now, there is still time for the UTC to change their mind about whether to call the next version 6.3 or 7.0, and when it will be released, especially as v. 6.2.1 is scheduled for Spring 2013. We're an encyclopedia not a WP:CRYSTALBALL, so we can afford to wait until the UTC has announced what the next version of Unicode is before reporting it. Indeed we owe it to our readers not to report "expectations". If you feel strongly then take it to the Unicode talk page. BabelStone (talk) 09:14, 3 December 2012 (UTC)

- I guess the real question is, would you have honestly removed the claim if it had been uncited? There is a threshold of contention, below which inline citations are not necessary. Specifically, does it meet WP:NOCITE? I don't see how there is any way that it would even meet that very basic criteria, and the currently in progress release of a standard really doesn't meet WP:CRYSTALBALL either: it is almost certain to take place, the information is not a claim about the future, but rather about the current planning. VanIsaacWS Vexcontribs 09:27, 3 December 2012 (UTC)

- Note that the latest approved UTC minutes (UTC #131) specifies:

- 6.2: a release to include one character, the Turkish Lira Sign, planned for September 2012

- 6.2.1: optional, with data and UAXes in mid-2013

- 7.0: January 2014 to include characters from amendments 1 and 2

- The two draft UTC minutes (UTC #132 and UTC #133) do not mention the version after 6.2.1 at all, so clearly the UTC has not yet made a decision as to what the version will be called and when it will be released. Rick McGowan is a UTC member, but he is not the UTC, and a private email from him does not represent the UTC and is therefore not sufficiently reliable. BabelStone (talk) 09:28, 3 December 2012 (UTC)

- Of course I would have removed the claim if it was uncited! I am probably closer to the UTC than you, but I honestly do not know whether the next version will be 6.3 or 7.0, or whether it will be released in Summer or Autumn 2013. Of course there will be a new version of Unicode, but is still too far away to report yet. I would have no objections to you adding a statement about the scheduled 6.2.1 release, sourced to UTC #131 minutes. BabelStone (talk) 09:34, 3 December 2012 (UTC)

And here it is, folks! http://www.unicode.org/mail-arch/unicode-ml/y2012-m12/0037.html

Feedback requested for Unicode 6.3

Unicode 6.3 is slated to be released in 2013Q3. Now is your opportunity to comment on the contents of this release. The text of the Unicode Standard Annexes (segmentation, normalization, identifiers, etc.) is open for comments and feedback, with proposed update versions posted at UAX Proposed Updates <http://unicode.org/reports/uax-proposed-updates.html>. Initially, the contents of these documents are unchanged: the one exception is UAX #9 (BIDI), which has major revisions in PRI232 <http://www.unicode.org/review/pri232/>. Changes to the text will be rolled in over the next few months, with more significant changes being announced. Feedback is especially useful on the changes in the proposed updates, and should be submitted by mid-January for consideration at the Unicode Technical Committee meeting at the end of January. A later announcement will be sent when the beta versions of the Unicode character properties for 6.3 are available for comment. The only characters planned for this release are a small number of bidi control characters connected with the changes to UAX #9.

http://unicode-inc.blogspot.com/2012/12/feedback-requested-for-unicode-63.html

Sincerely, VanIsaacWS Vexcontribs 01:34, 13 December 2012 (UTC)

- Vanisaac, I know you are a good and serious editor. But I do not like this speculation. Also, I do not see a reason to include such information into the encyclopedia. Let Unicode 6.3 take care of itself, probably in 2013. -DePiep (talk) 02:10, 13 December 2012 (UTC)

Misappropriation of the Unicode Consortium Logo

Quote from the Unicode Consortium website: “The Unicode Logo is for the exclusive use of The Unicode Consortium”

The logo shown is not the "Unicode" logo, but the logo of the Unicode Consortium, thus it doesn't belong into this article, but solely into the Unicode Consortium article. Kilian (talk) —Preceding undated comment added 03:09, 19 August 2013 (UTC)

- You are misquoting the legalese out of context. The trademark policy states in full:

The Unicode Logo is for the exclusive use of The Unicode Consortium and may not be used by third parties without written permission or license from the Consortium. The Consortium may grant permission to make use of the Logo as an icon to indicate Unicode functionality—please contact the Consortium for permission. Certain “fair uses” of the Logo may be made without the Consortium’s permission—specifically, the press may make fair use of the Logo in reporting regarding the Consortium and its products and publications.

- This just asserts that the logo is an intellectual property of the Unicode Consortium and its use requires their permission. It doesn’t say that it is the logo of the Unicode Consortium as opposed to Unicode, and in fact, the part about “Unicode functionality” makes it clear that they do intend it to be the logo of the Unicode standard.—Emil J. 13:34, 19 August 2013 (UTC)

Lack of criticism

The article is reading as a panegyric now. There must be criticism present. The most obvious is Unicode data take up more memory and require non-trivial algorithms to process without any practical benefit. 178.49.18.203 (talk) 09:43, 26 September 2013 (UTC)

- Unicode doesn’t take up any memory. It is not an encoding. But if you use UTF-8 for encoding the 2×26 upper and lower case letters of English each letter will require just as much memory as ASCII encoded text: one byte. LiliCharlie 13:16, 26 September 2013 (UTC)

- Except that that's clearly false; the number of Chinese characters people want to use in computers is larger than 2^16. Supporting that is clearly a practical benefit, and any encoding supporting that is going to run somewhere between UTF-32 (trivial to decode, lots of memory) and SCSU (very complex, approaches legacy encodings in size).--Prosfilaes (talk) 17:46, 26 September 2013 (UTC)

- If you find reliable sources for criticism or even discussions for/against Unicode, feel free to add the material. However, criticism sections are not mandatory. There is none in the Oxygen article for example. --Mlewan (talk) 18:11, 26 September 2013 (UTC)

- You are obviously joking. There must be sources, as files in Unicode format take twice as much size as ANSI ones, and you cannot use simple table lookup algorithms anymore. This information is just waiting for someone speaking English to make it public. 178.49.18.203 (talk) 11:38, 27 September 2013 (UTC)

- I'm sorry, but you are mistaken. You are confusing scalar values with encodings. In Unicode, these are completely different entities. The UTF-8 byte value of F0 90 A8 85 is identical to UTF-16 D802 DE05, which are both encodings of U+10A05. When you get down to things like Z - U+005A, the UTF-8 ends up as a single byte: 5A, taking up exactly as much disk space as its ANSI encoding. The fact that it has a four digit scalar value is irrelevant to how much room it takes on disk. Stateful encodings like BOCU and SCSU can bring this efficiency in data storage to every script, and multi-script documents can actually end up with smaller file sizes than in legacy encodings. VanIsaacWS Vexcontribs 13:34, 27 September 2013 (UTC)

- Stateful encodings are not generally useful. On the other hand, the requirement to represent, let's say, letter А as 1040 instead of some sane value like 192, and implement complex algorithms to make the lookup over 2M characters' size tables possible. And the requirement to use complex algorithms for needs of obscure scripts. It is clearly a demarch to undermine software development in 2nd/3rd world countries, as 1st world ones can simply roundtrip that Unicode hassle with trivial solutions. For the first world, 1 character is always 1 byte, like it always was. 178.49.18.203 (talk) 11:55, 28 September 2013 (UTC)

- 1. There is no “requirement to represent [...] letter А as 1040.” Unicode is not an encoding, or representation of characters.

- 2. I fail to understand the supposed relationship between language and your hierarchy of countries. Is English speaking Liberia 1st world while French speaking Canada isn’t? Is Texas 2nd or 3rd world just because Spanish is one of the official languages? What about Chinese/Cantonese speaking Hong Kong? LiliCharlie 16:25, 28 September 2013 (UTC) — Preceding unsigned comment added by LiliCharlie (talk • contribs)

- @178.49.18.203: Please read Comparison of Unicode encodings and note that Unicode text can be encoded in a number of ways. Besides it is not true that there is no practical benefit, as there would be no Wikipedia without Unicode. LiliCharlie 18:37, 27 September 2013 (UTC) — Preceding unsigned comment added by LiliCharlie (talk • contribs)

- More to the point: You have made a common mistake of using the term "Unicode" to mean what is really called UCS-2, which is a method of placing a 2^16 subset of Unicode into 16-bit code units. This is used on Windows and some other software, all of which mistakenly call it "Unicode". You are correct that UCS-2 is an abomination, and list some of its problems. But Unicode (the subject of this article) can be stored in 8-bit code units using UTF-8 and many other ways.

- Several times there have been attempts to insert text saying "the term 'Unicode' is often used to indicate the UCS-2 encoding of Unicode, especially by Windows programmers". However this keeps getting removed.Spitzak (talk) 18:46, 27 September 2013 (UTC)

- One small technical correction: UCS-2 has been deprecated in favor of UTF-16 since Unicode 2.0. It's an incredibly common mistake, but the UCS-2 specification does not actually have the surrogate mechanism so it does not support any scalar values above U+FFFF, while the UTF-16 specification does. And I object to the characterization of UCS-2/UTF-16 as "abominations". They have done what they were designed to do, which is to provide a functionally fixed width encoding form, but that end has been obsolete for many years. VanIsaacWS Vexcontribs 05:24, 28 September 2013 (UTC)

- Unicode is not a format. Neither is ANSI. You need to understand what you're criticizing first.

- Perhaps some English-only programmers may feel that's for no practical benefit, but everyone else loves the fact that you can deal with the world's languages without worrying about the poorly documented idiosyncrasies of a thousand different character sets. It's easier to use simple table lookup algorithms on Unicode than on older double byte character sets needed for Chinese and Japanese. As I said above, there are more than 2^16 characters in the world meaning any world character set is going to have these same size problems.--Prosfilaes (talk) 18:53, 27 September 2013 (UTC)

- Hmm. I don’t think it is true that “any world character set is going to have these same size problems,” as a set without precomposed CJKV characters is quite conceivable. The same applies to precomposed Hangeul syllables, precomposed Latin letters with diacritics, and so forth. (If round-trip convertibility is desired, such a character set may require special characters for this purpose.) LiliCharlie 20:01, 27 September 2013 (UTC) — Preceding unsigned comment added by LiliCharlie (talk • contribs)

- Except all you've done is replaced the large number of defined characters, requiring slightly larger code units with a small number of defined characters requiring significantly longer strings of slightly smaller code units. Not really much of an advantage there.

- That’s why the East Asian delegates kept silent at early Unicode meetings when they were asked if it was possible to decompose Han characters. But again: a character set is not an encoding, and a smart encoding scheme for a “world character set” with decomposed Han characters does not lead to large text files. The trick is that not all Han characters are equally frequent. This is similar to what UTF-8 does with frequent Latin letters, which require fewer bytes. LiliCharlie 17:46, 28 September 2013 (UTC) — Preceding unsigned comment added by LiliCharlie (talk • contribs)

- Decomposed Hangul and Latin is easy. Chinese is quite a bit harder. For the purposes of the OP, it makes no difference; 2^16 or 2^32 characters is all the same to him. The key problem to me is that you've moved Chinese from the easiest script in the world to support (monospace characters with no combining that have a one-to-one relationship to glyphs) to one that involves massive look up tables to support and is going to be less reliable about supporting a consistent set of characters for the end user. We have smart encodings for Unicode, but few use them, since the value isn't worth the extra difficulty in processing.--Prosfilaes (talk) 17:27, 29 September 2013 (UTC)

- That’s all quite true. However it is also true that in the course of my studies I now and then come across Han characters that are not assigned Unicode code points, and most of them are not even scheduled for inclusion in CJK Extension-E. You can see a few of them on my user page, but there are many more. A more general scheme such as Wénlín’s Character Description Language certainly has advantages for people like me, but there is no way for plain text interchange (yet). LiliCharlie 01:56, 1 October 2013 (UTC)
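The byte counts quoted in this thread can be checked with Python's built-in codecs. The sketch below is only an illustration: the helper name `encoded_forms` is made up for this example, and `cp1251` is used as a stand-in for the "ANSI" code page in which А is 192 (an assumption, since the thread does not name the code page).

```python
def encoded_forms(ch: str) -> dict:
    """Return the scalar value of one character and its bytes in two encoding forms."""
    return {
        "scalar": ord(ch),
        "utf-8": ch.encode("utf-8"),
        "utf-16-be": ch.encode("utf-16-be"),
    }


for ch in ("\u005A", "\u0410", "\U00010A05"):
    forms = encoded_forms(ch)
    print(
        f"U+{forms['scalar']:04X}",
        forms["utf-8"].hex(" ").upper(),
        "|",
        forms["utf-16-be"].hex(" ").upper(),
    )

# Cyrillic capital A (U+0410, decimal 1040) in a legacy single-byte code page.
legacy_a = "\u0410".encode("cp1251")
print(legacy_a[0])
```

Z stays at one byte in UTF-8, А takes two bytes in UTF-8 against one byte in the legacy code page, and U+10A05 comes out as F0 90 A8 85 in UTF-8 and D802 DE05 in UTF-16, matching the values given above. The scalar value 1040 is the same in every case; only the encoded bytes differ.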

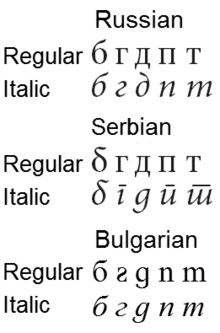

Unicode support for Serbian and Macedonian

Left: “soft dotted” (most languages), right: Lithuanian.

See the image and note that OpenType support is still very weak (Firefox, LibreOffice, Adobe's software and that's it, practically). Also, there are Serbian/Macedonian Cyrillic vowels with accents (total: 7 types × 6 possible letters = 42 combinations) where the majority of them don't exist precomposed, and it is impossible to enter them. A lot of today's fonts still have issues with accents.

In Unicode, Latin scripts are always favored, which is simply not fair to the rest of the world. They have space to put glyphs for dominoes, a lot of dead languages etc. but they don't have space for real-world issues. I want Unicode organization to change their politics and pay attention to small countries like Serbia and Macedonia. We have real-world problems. Thank you. --Крушевљанин Иван (talk) 23:47, 13 February 2014 (UTC)

- This is simply not true. No matter if the script is Latin, Cyrillic or other, Unicode encodes precomposed letters only for roundtrip compatibility with national standards that were in existence before Unicode was introduced.

- Besides, glyphic differences like the ones shown in the image you posted on the left also exist among languages using Latin, see my illustration for Lithuanian on the right. LiliCharlie (talk) 03:39, 14 February 2014 (UTC)

- Hey, I notice user Vanisaac deletes my contributions here and on article page! Whatever the reason he has, until we get correct glyphs and reserved codepoints, I repeat that Unicode is still anti-Serbian and anti-Macedonian organization! --Крушевљанин Иван (talk) 09:20, 14 February 2014 (UTC)

- Unicode support is never enough; OpenType is necessary for most of the scripts in the world that don't have the distinct separate characters of Latin, Cyrillic, Chinese, etc. You may repeat whatever you want, but your biases and what you want Unicode to do are not relevant to this page. It's not a matter of space; it's a matter of the fact that disunifying characters, by adding precomposed characters or by separating Serbian and Russian characters has a huge cost; the first would open up or exacerbate security holes, and the second would invalidate every bit of Serbian text stored in Unicode.--Prosfilaes (talk) 09:58, 14 February 2014 (UTC)

- Biases? Real-world problem, I say. Invalidate? No, because real Serbian/Macedonian support still doesn't exist! And we can develop converters in the future, so I don't see any "huge cost" problems. Security holes? What security holes? Anyway, thank you for the reply. --Крушевљанин Иван (talk) 10:14, 14 February 2014 (UTC)

- Whether or not Unicode has any problems with one or more languages, Wikipedia is not the place to discuss them.

- Your contributions are welcome if they’re neutral (which calling Unicode “anti-Serbian and anti-Macedonian” is not) and properly sourced (which this one is not). Otherwise, please consider contributing to some other project (the Web is full of them!) which does not impose such restrictions on its participants.

- (You may choose to propose changes to the rules instead, but I doubt that the changes to the Five pillars are likely to gain any considerable community support.)

- Thanks in advance for your consideration.

- — Ivan Shmakov (d ▞ c) 10:35, 14 February 2014 (UTC)

- Крушевљанин Иван is right. I looked at Serbian Cyrillic alphabet#Differences from other Cyrillic alphabets. The article says: "Since Unicode still doesn't provide the required difference, OpenType locl (locale) support must be present". So: Unicode does not provide the alternative glyph identity! (In other words: Unicode gives one code point for two different glyphs; it is left to the computer setting to choose, not possible by changing a Unicode codepoint number. That setting usually defaults to Russian glyphs).

- Must say, I do not see any political bias in this. So unless there is a source for such bias, we can not state that in the article. It is more like an issue from legacy backgrounds in handwritten Cyrillic alphabets.

- By the way, I know of one other such situation in Unicode: Eastern_Arabic_numerals#Numerals (change of language causes change of glyph). -DePiep (talk) 11:31, 14 February 2014 (UTC)

- Oops: ... but is solved differently by using separate codepoints: U+06F5 ۵ EXTENDED ARABIC-INDIC DIGIT FIVE and U+0665 ٥ ARABIC-INDIC DIGIT FIVE.

- Interestingly, Unicode does seem to allow different glyphs by culture:

- U+0415 Е CYRILLIC CAPITAL LETTER IE vs U+0404 Є CYRILLIC CAPITAL LETTER UKRAINIAN IE [2]
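The code points quoted above can be inspected with Python's standard `unicodedata` module. This is only a minimal sketch: the helper name `same_digit_value` is made up for illustration, and the script shows nothing more than that each pair consists of separately encoded characters with their own names.

```python
import unicodedata

# Code points quoted in the discussion above.
QUOTED = (0x0665, 0x06F5, 0x0415, 0x0404)

for cp in QUOTED:
    ch = chr(cp)
    # Print the code point, the character, and its formal Unicode name.
    print(f"U+{cp:04X} {ch} {unicodedata.name(ch)}")


def same_digit_value(a, b):
    """Hypothetical helper: True if two digit characters share a numeric value."""
    return unicodedata.digit(a) == unicodedata.digit(b)
```

Both digit characters carry the value 5, yet they are distinct code points, so plain text can tell them apart without any font or language setting.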

- Sorry, but you used a completely uncited passage, without context, in order to bolster the completely spurious claims by the OP. I appreciate a lot of things that you do, DePiep, but in this case, you are mistaken. Unicode, by its very architecture (see section 2.2 of the Unicode Standard) will never distinguish between different styles of letters unless they encode a semantic difference (eg IPA letter forms and mathematical alphabets). There is no "still"; it will never happen, because Unicode encodes plain text. The OpenType <locl> feature only needs to be present when distinguishing between locale-specific forms within a text using a single typeface. If a text uses fonts for a given locale - fonts that are, and will always be completely conformant with the standard - there is no need for OpenType <locl> support. VanIsaacWS Vexcontribs 18:29, 14 February 2014 (UTC)

- OP, me, you and others claim the very very same: Unicode does not differentiate between these Russian and Serbian forms. Apart from OP's opinions in this (the political things), that is what is stated and we agree it is correct. OP then noted that OpenType is needed, now you add that there are other ways (like a localised font); that is minor but not nullifying the point. As noted, interestingly, Unicode seems to take some leeway e.g. with Old Church Slavonic script letters in this ch7.4.

- The distinction is important, though. The erroneous presentation implies that the use of OpenType <locl> feature is the way to display locally-preferred forms, a correct presentation shows that the OpenType <locl> feature is a way to display locally-preferred forms. VanIsaacWS Vexcontribs 20:03, 14 February 2014 (UTC)

- this edit is correct and should not have been reversed (reversed without es?). this reversal by Vanisaac of a talkpage comment is simply wikillegal. -DePiep (talk) 11:31, 14 February 2014 (UTC)

- The edit in question is poorly worded at best, and calling this particular issue “an example of the problem when Unicode support isn't enough” is a rather strong claim and thus requires a similarly strong source. Referencing some unnamed “competitive technologies” (and why exactly can’t they be cooperative technologies?) is similarly unfortunate.

- (May I remind you that Wikipedia is not concerned with what is and what is not, – but rather with what reliable sources say and what they do not?)

- The statements at Serbian Cyrillic alphabet#Differences from other Cyrillic alphabets are not sourced (too!), and are actually somewhat misleading. For instance, it’s claimed that the italic and cursive forms of ш and п in Serbian differ to that in Russian, while I’ve seen these letters written as shown in Russian handwriting, just like the caption to that image appears to suggest (“note that all are quite acceptable in handwritten Russian cursive”.)

- Regarding the Ukrainian and non-Ukrainian codepoints for “ie”, please note that these glyphs differ in any typeface – not just italic or cursive. (Why, Latin “t” is also written differently in English and German cursive; cf. File:Cursive.svg to de:Datei:Ausgangsschrift der DDR 1958.png, for instance; do we really want two distinct codepoints for these “letters”?)

- On the other hand, the software I use seems to account for these differences properly. For instance, б in бук looks different to that same letter б in буква.

- As for removing the topic from the talk page, I do agree that this wasn’t particularly helpful, but I still would like to note that:

- Wikipedia is not the place to discuss the issues with Unicode (as opposed to: with the Wikipedia’s own article on Unicode);

- Wikipedia’s Neutral point of view guideline applies to talk pages just as well.

- That is: this topic has seen an unfortunate start, and still goes by an unfortunate heading.

- — Ivan Shmakov (d ▞ c) 15:15, 14 February 2014 (UTC)

- I removed them because they are factually wrong. There is nothing inherent in Unicode code points that limits them to a particular form. The fact that you have shitty fonts isn't a problem with Unicode, it's a problem that you are too cheap or lazy to get the right typefaces for your needs. If you want a document to display Macedonian or Serbian forms, use a font for Macedonian and Serbian. The only time that OpenType's language variants even enters into the equation is if you somehow want to display both Serbian and Russian in their preferred forms in the same document with the same font. Not only is your commentary slanderous, it is just factually wrong. You claim prejudice for a policy (no precomposed characters after v 3.0) that ensures that languages like Macedonian and Serbian can be exchanged in the open, like, say, the internet. You propagate a factually wrong image - there is no such thing as a "standard" form for any character, which even a cursory reading of the Unicode Standard would tell you. Instead, you take an ignorant screed and expect us to just sit here and take your massively, deliberately uninformed bias as some sort of gospel. Well, you are wrong. You are wrong morally, you are wrong factually, you are just plain wrong. VanIsaacWS Vexcontribs 16:21, 14 February 2014 (UTC)

- (ec):::"is poorly worded"? Well, then improve the wording.

- "is a rather strong claim" is only so when you take politics into account. Without that, and as I read it, it is just a description of a Unicode issue. Not a criminal accusation in a BLP.

- I read deviation only. Users Prosfilaes and LiliCharlie here are answering in the wrong track (explaining a wrong Unicode issue). In here there are calls of "bias" and you wrote "Whether or not Unicode has any problems with one or more languages, Wikipedia is not the place ...": ??? For me, such a fact could be part of the Unicode description in Wikipedia. And of course Vanisaac reversing talkpage content is below standard. All this is deviation from the actual content at discussion.

- The wikilink I provided does give a source. Then again: you ask for a source, and then still mean to say that "Wikipedia is not the place" for this? Clearly, for Unicode it is a topic.

- The Ukraine EI example I gave says exactly what I said. Your expansion on this does not alter that fact.

- The edit in the article did not have any of these political etc. angles, and on this talkpage editors could have simply separated the opinion chaff from the content wheat (as I did). Just improve the article. -DePiep (talk) 16:29, 14 February 2014 (UTC)

- “[I]t is just a description of a Unicode issue” – there is a disagreement on whether there is an issue or not. If you know about a reliable source claiming that the issue is indeed present, feel free to reference it in the article (and I guess the other one will benefit from that source, too.) If not, – I believe that this claim can wait until someone else finds such a source.

- The source given above seems to be self-published, and thus falls short of Wikipedia guidelines for reliable sources. Moreover, the source claims: “To demonstrate this, look at these letters (your browser must support UTF-8 encoding)”, and then gives the letters whose italic forms should differ between Serbian and Russian:

- б г д п т

- б г д п т

- And indeed, the software I use renders the two lines above differently. (Should I provide a screenshot here?) Which I do take as an indication that there is no issue with Unicode.

- (I think I’ve fixed the heading non-neutrality issue with this edit.)

- — Ivan Shmakov (d ▞ c) 17:31, 14 February 2014 (UTC)

- Whether it is an issue? It is a fact, irrespective of whether you like it to be mentioned or not. The fact is: Unicode does not provide a mechanism to differentiate between some cyrillic characters written differently in Russian and Serbian, it takes additional material to get it going. Exactly that is what Крушевљанин Иван wrote. (Forget about the politicizing & emotions, and sure the section title here is improved; I try not to read these deviations).

- You stated that the statements were "not sourced", then I produce a source straight from that passage. Now what were you asking? Should I expect that if I produce another source here, you switch to another point? Your WP:indiscriminate link suggests you already have looked for it. This about that source: it is to show that Unicode does not cover it. However selfpublished it may be, it still proves the "not". Another source could be: all www.unicode.org: see, it is not in there. It is about proving a 'not'.

- Of course I get that the two lines you gave can show different (in your browser). That is the point proven: you need to use a non-Unicode switch. -DePiep (talk) 19:02, 14 February 2014 (UTC)

- My initial comment to this discussion reads: “[…] contributions are welcome if they’re […] properly sourced”. Could you please follow the link given in that sentence and tell if the source in question fits the criteria listed there?

- Did you notice that the source dates back to c. 2000, BTW? Frankly, I fail to understand how could one be sure that the “facts” it documents are still relevant to the latest version of the standard released in 2013.

- I contest the “fact” as the “Cyrillic characters written differently in Russian and Serbian” do only differ in handwriting (and in typefaces purporting to imitate one; but then, handwritten forms show considerable variance among the users of even a single language.) I do agree with VanIsaac’s comment above that Unicode is concerned with encoding semantic differences (as in: Cyrillic А vs. Greek Α vs. Latin A – despite the common origin of all these letters, and considerable similarity among their usual forms), while purely presentational differences are out of scope of the standard. (I do not agree that having separate Cyrillic fonts to render different languages is anything but a crude workaround, but that’s not something to be discussed here.)

- — Ivan Shmakov (d ▞ c) 23:59, 14 February 2014 (UTC)

- Vanisaac, it turns out that throwing out words like "lazy" and "cheap" hardly help the conversation to proceed without rancor. Perhaps "standard" is not the best word, but in any international font that include italic Cyrillic, the Russian forms are going to be the ones used. They are the normal form of the glyphs, and anyone expecting another form of the glyph is going to have to deal with that. The Unicode separation of characters is a pragmatic pile of compromises, and the biggest thing to understand is that it's standardized; nobody is going to break working code to make any changes anyone wants to make to languages that have been encoded over a decade.--Prosfilaes (talk) 00:49, 15 February 2014 (UTC)

- It took me two minutes of a single google search to find the Macedonian Ministry of Information's announcement of 40 free fonts that display all of the locally preferred forms. People that don't even try, but come here and malign members of this community - there is at least one Wikipedian whom I know to have been involved with these issues - don't get my sympathy. VanIsaacWS Vexcontribs 01:07, 15 February 2014 (UTC)

- And how long did it take you to install them and get all programs to use them? In any case, people who choose to be a dick don't get my sympathy.--Prosfilaes (talk) 03:54, 15 February 2014 (UTC)

- Well, you certainly seem eager to bend over backwards to defend a new account who starts their tenure here by attacking established members of this community, so I'm not sure that gaining your support is really indicative of anything in particular. VanIsaacWS Vexcontribs 06:23, 15 February 2014 (UTC)

- "a new account who starts their tenure here by attacking established members of this community": not without diffs please, or even just one. I am astonished by the responses that editor got. Those 'established' editors you have in mind had better watch their own behaviour. -DePiep (talk) 11:32, 15 February 2014 (UTC)

This was an attack on people who decided several issues with the architecture of this standard back in the 90s, at least one of whom, Michael Everson, is a well-established Wikipedian, who has dedicated his life to serving minority language communities throughout the world. It has not been retracted, nor an apology issued, and another editor had to remove its inflammatory heading. It sickens me that any member of this community finds it anything but morally reprehensible to stand beside this person's actions. VanIsaacWS Vexcontribs 16:09, 15 February 2014 (UTC)

- Stop being a dick. It's stunning; people don't tend to apologize when other people engage in personal attacks on them. That any of us should take an attack on Unicode personally is ill-advised. Signed, David Starner [3].--Prosfilaes (talk) 22:18, 15 February 2014 (UTC)

- Vanisaac Stop being a dick. That diff was not personal to any editor. No editor complained. It was more you who failed to explain the backgrounds and instead started shouting unhelpful abuses. If you think you know the situation that smart, you should have explained that to the IP editor. -DePiep (talk) 08:38, 23 February 2014 (UTC)

misuse of the term Unicode

I added a very helpful comment recently along the lines of "the term unicode is frequently and incorrectly used to refer to UCS-2" and some smart removed it. My comment is a good one and deserves to remain on the page. Perhaps some people should learn from the experience of people who actually have to program computers and know a lot about how the term 'unicode' is used. —Preceding unsigned comment added by 212.44.43.10 (talk) 10:04, 24 September 2008 (UTC)

- I removed the statement because it was an unsupported, subjective comment. In order to be accepted a statement such as this would need to cite an authoritative source. I am a programmer, and I think that I do know a lot about how the term 'unicode' is used, and I for one do not think that the statement that people commonly confuse Unicode with UCS-2 is correct. UCS-2 is an obsolete encoding form for Unicode, and if somebody does not know what Unicode really means they are hardly likely to know what UCS-2 is (or be able to distinguish between UCS-2 and UTF-16, which is what your comment implies). From my experience with ignorant fellow-programmers, I think that perhaps you mean that some programmers think that Unicode is more or less just 16-bit wide ASCII. BabelStone (talk) 09:04, 25 September 2008 (UTC)

- In Windows programming the term "Unicode API" almost always means an API that accepts 16-bit "characters". It may treat it as UTF-16, or as UCS-2, or in most cases it really does not do any operations that would be different depending on the encoding. The real confusion is that "Unicode API" often means "not the 8-bit API" even though the 8-bit API can accept UTF-8 encoding.Spitzak (talk) 20:44, 19 November 2010 (UTC)

- If you look at the bottom of this talk page you will find somebody who is making exactly this mistake. I think the comment should be reinserted. The use of the word "Unicode" to mean UCS-2 is very, very, common, and many people will go to this page expecting an explanation of 16-bit-per-code strings, not the glyph assignments.Spitzak (talk) 18:49, 27 September 2013 (UTC)

UCS-2 should have a separate paragraph, at least. It seems confusing to say it is not standard and then to include it with more about -8 and -16. It seems this would be clearer. — Preceding unsigned comment added by Benvhoff (talk • contribs) 07:55, 10 March 2014 (UTC)
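The UCS-2 versus UTF-16 distinction discussed in this thread can be sketched in a few lines of Python. The function names `utf16_code_units` and `fits_in_ucs2` are invented for this illustration and are not part of any standard API; the sketch only relies on the fact that characters outside the BMP need two 16-bit code units (a surrogate pair) in UTF-16, which a fixed-width 16-bit encoding such as UCS-2 cannot express.

```python
def utf16_code_units(text):
    """Return the 16-bit code units of text when encoded as UTF-16 (big-endian)."""
    data = text.encode("utf-16-be")
    return [int.from_bytes(data[i:i + 2], "big") for i in range(0, len(data), 2)]


def fits_in_ucs2(text):
    """Rough check (an assumption of this sketch): every character lies in the BMP."""
    return all(ord(ch) <= 0xFFFF for ch in text)


bmp_char = "A"              # U+0041, inside the BMP
gothic = "\U00010348"       # U+10348 GOTHIC LETTER HWAIR, outside the BMP

print([hex(u) for u in utf16_code_units(bmp_char)])  # one code unit
print([hex(u) for u in utf16_code_units(gothic)])    # two code units (surrogate pair)
print(fits_in_ucs2(bmp_char), fits_in_ucs2(gothic))
```

An API that only stores and passes along 16-bit units never notices the difference, which may be why "Unicode" ends up being used loosely for both.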

Displayable newest Unicode with displayable newest characters

- unicode-table.com/

- teilnehmer.somee.com/WpfTextImage/Fonts.aspx — Preceding unsigned comment added by 173.254.77.145 (talk) 17:56, 7 February 2015 (UTC)

- Whatever this is intended to mean: The Unicode Consortium provides the world with charts that show representative glyphs for each of the (visible) encoded characters, including the diacritics. Please rely on the results of their decades of practice and give preference to these constantly updated charts, unless you offer very good arguments in favour of another authoritative source. LiliCharlie (talk) 19:48, 7 February 2015 (UTC)

UNICODE programming language, 1959

A programming language named UNICODE is mentioned in this January 1961 CACM cover image. Apparently it was a "hybrid of FORTRAN and MATH-MATIC" for UNIVAC computers.[4][5] --92.213.89.46 (talk) 14:09, 21 November 2015 (UTC)

External Links

The external links section is now full of self-promoting links to various Unicode code chart viewers and character picker applications of dubious quality, many of them restricted to a subset of Unicode characters (e.g. only BMP or an old version of Unicode). I think it would be a good idea to rid the external links section of such self-promoting links, and only link to sites which do give further useful information about Unicode. BabelStone (talk) 10:25, 8 January 2009 (UTC)

- I support your idea. Wikipedia is not a link repository. — Emil J. 10:56, 8 January 2009 (UTC)

- At a minimum I suggest removing YChartUnicode and Table of Unicode characters from 1 to 65535 (including 64 symbols per page and 100 symbols per page), as these provide little or no useful information and are limited to the BMP. libUniCode-plus is a software library that seems to me to be of peripheral relevance and could also be removed (probably a link to ICU would be much more useful). Ishida's UniView supports 5.1, so I think it can stay, and although DecodeUnicode is only 5.0, it is probably still a good link to keep. BabelStone (talk) 13:47, 9 January 2009 (UTC)

I have written a short overview of UNICODE. I have tried to be short and crisp. Please add this in external links so that more people can use it. Short and Crisp Overview of UNICODE —Preceding unsigned comment added by Skj.saurabh (talk • contribs) 15:26, 5 March 2010 (UTC)

- Please do not try to use Wikipedia to promote your personal website. Your page is not appropriate to link to from the article as it does not provide any information which is not already in the article or available directly from the Unicode website. Please read the policy on external links at WP:ELNO. BabelStone (talk) 09:53, 11 March 2010 (UTC)

In reply to this I would like to say that I have not opened a tutorial site. Therefore I do not need to publicize my site. We use this site internally for our company. We have made it publicly available since we thought that some of the resources on the net were not crisp or in-depth. I sincerely think that our article gives a better introduction to UNICODE than the UNICODE site as well as Wikipedia. If you say that all information is there on these two sites then there is no need of Wikipedia also since all information on UNICODE is there on UNICODE site. Also Wikipedia page is more complex. A casual viewer will find it very technical and confusing. I found it so and then had to consult many sources in order to write the page for myself. If you do not think it adds value I do not have any problem but I think people would have found our article useful. —Preceding unsigned comment added by 115.240.54.153 (talk) 14:48, 11 March 2010 (UTC)

- A site can be considered promoted by the mere mention of a site. Also, the Indian English dialect can be harder to understand by speakers of other dialects of English. Hackwrench (talk) 18:37, 3 February 2016 (UTC)

ভাল অভ্যাস গড়ুন

গবেষণা থেকে জানা গেছে যে, শরীবর এবং মনের জন্য ইতিবাচক চিন্তাভাবনা হচ্ছে জরুরি। তাই মনের কারখানায় শুধুই ইতিবাচক চিন্তাভানা তৈরী করুন। ভাল বই পড়ুন। ইন্টারনেটে ভালভাল সাইটের সাথে থাকুন। বন্ধুদের মাঝে খারাপ বন্ধু থাকলে তাদের ছাটাই করুন। ধর্মীয় আত্ম-উন্নয়নের বইগুলো হচ্ছে ভাল বই। এগুলো নিয়মিত পড়ুন। ভাল সাথী হচ্ছে সে, যে বেশিরভাগ সময় উৎফুল্ল থাকে। জীবনে আলোর দিকটা তার নজরে পড়ে। এরা আপনার হৃদয়কে সারাজীবন আলোড়ীত করবে। তাই এদের সঙ্গ কখরো ত্যাগ করবেন না। খারাপ বন্ধু তা যতোই কাছের হোক না কেন, ত্যাগ করুন। নাহলে খারাপ চিন্তা আপনাকে আক্রান্ত করবে। মনে রাখবেন, ভাল চিন্তার চেয়ে খারাপ চিন্তাই মানুষকে বেশি আকর্ষন করে। —Preceding unsigned comment added by Monitobd (talk • contribs) 12:34, 13 January 2010 (UTC)

- This isn't Devanagari (Hindi). I used script recognition software to find out what language this is, and apparently it's "Bishnupriya Manipuri". Can anyone read it? I searched everywhere, and there's not a single online translator. Should I just ignore it... Indigochild 01:42, 12 April 2010 (UTC)

It is Bengali (Bangla, India). — Preceding unsigned comment added by 112.133.214.254 (talk) 06:02, 2 January 2013 (UTC)

- You can probably just ignore it, as it seems to be spam. Here's what Google Translate gives: “Build good habits [edit] // Research has shown that positive thinking is important for saribara and mind. Just make positive ideas of the factory. Read a good book. Keep up with the best dressed. If you prune them in the midst of his worst friend. Religious self-development books are good books. They read regularly. He is a good fellow, is delighted that most of the time. He noticed the light side of life. They will alorita your heart forever. Therefore, do not leave the company kakharo. No matter how close a friend, whatever it is bad, leave. Otherwise, the bad thoughts will affect you. Remember, good thoughts attract more people than the bad thoughts.” 184.148.205.59 (talk) 21:22, 19 February 2016 (UTC)

How to enter unicode characters

This article completely fails in explaining to average people how to enter a special letter with a Unicode code. There are countless lists of codes, but none explains how to enter the code to insert the desired letter into a text. —Preceding unsigned comment added by 95.88.121.194 (talk) 14:12, 21 April 2009 (UTC)

- That is not the purpose of this page. Please see the Unicode input article, which is linked to from this article. BabelStone (talk) 15:12, 21 April 2009 (UTC)

- i agree with the initial complainer and came here to make the same gripe. will check your input thing there. Cramyourspam (talk) 06:25, 26 September 2012 (UTC)

- To average people? I am guessing you mean to an average English speaking American using a Windows PC and keyboard. A short answer is: either copy-and-paste it, or you can try using the Numeric Keypad along with the <Alt> key. <Alt>+132 → ä but <Alt>+0132 → „ (on my Windows 10 PC). (Look up the Unicode codepoint you want to enter; if it's expressed in hexadecimal, convert to decimal, and enter it while holding down the <Alt> key.) There is no guarantee that either will work with your device. I am not aware of any word-processing program which is capable of rendering ALL 120,000 defined codepoints; Microsoft Word 2016 certainly doesn't. This is an extremely difficult proposition. First because the number of codepoints is changing frequently and second because certain governments (can you say mainland China?) reserve the right to add or change codepoints, and third because a codepoint may alter the rendering of one OR MORE following or preceding characters (including WHERE the entries are added!). There are far more things on Heaven and Earth, Horatio...Abitslow (talk) 21:06, 3 March 2016 (UTC)
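The two Alt-code results quoted above, and the hexadecimal-to-decimal step, can be reproduced in Python. This sketch assumes a US English Windows setup, where the plain form is commonly interpreted in the OEM code page (CP437) and the zero-prefixed form in Windows-1252; other locales may behave differently. The helper name `codepoint_to_decimal` is invented for this example.

```python
def codepoint_to_decimal(codepoint):
    """Hypothetical helper: turn 'U+00E4' (or '00E4') into its decimal value."""
    return int(codepoint.upper().replace("U+", ""), 16)


# Byte 132 read in two different legacy code pages (assumed US English defaults).
oem_char = bytes([132]).decode("cp437")    # what <Alt>+132 is reported to give
ansi_char = bytes([132]).decode("cp1252")  # what <Alt>+0132 is reported to give

print(oem_char, ansi_char)
print(codepoint_to_decimal("U+00E4"))  # decimal value of the code point for ä
```

The same number giving two different characters is a property of the legacy code pages, not of Unicode itself, where ä is always U+00E4.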

Another criticism of the lede

Unicode Consortium goes to great pains to distinguish SOME codepoints which have different semantics but identical rendering/representation. This article fails to clarify that. I think what a code-point is should appear in the lede, as well as the failure of some older code-points to be unambiguous. I also think that the fact that in many cases, character representation may be done using several different code-points should be mentioned, as well as the fact that some errors have been 'locked in' because of the need for, and promise of, stability. Just my two cents. I guess I'm saying that the lede and introduction need a complete rewrite to make them more palatable to the general public who don't know the difference between a glyph, character, or symbol, nor what font and rendering have to do with it.Abitslow (talk) 19:11, 9 March 2016 (UTC)

- Maybe the topic of different semantics despite ±identical representations deserves an entire section of its own, as such characters have led to severe security concerns. For example, a fake URL https://Μісrоsоft.com with a mix of Latin, Greek and Cyrillic letters has to be prevented from being registered, as it might be visually indistinguishable from the all-Latin https://Microsoft.com. Love —LiliCharlie (talk) 19:40, 9 March 2016 (UTC)
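Both points in this thread (one character written with several code point sequences, and look-alike letters from different scripts) can be illustrated with Python's standard `unicodedata` module. This is a rough sketch only: `scripts_used` is a made-up helper that guesses the script from the first word of each character name, which is a simplification and not how registries actually vet domain names.

```python
import unicodedata

# One visible character, two encodings: precomposed vs. base letter + combining accent.
precomposed = "\u00e9"   # é as a single code point
decomposed = "e\u0301"   # e followed by COMBINING ACUTE ACCENT
print(precomposed == decomposed)                                # differ as raw strings
print(unicodedata.normalize("NFC", decomposed) == precomposed)  # equal after NFC


def scripts_used(text):
    """Hypothetical helper: first word of each character's Unicode name."""
    return {unicodedata.name(ch).split()[0] for ch in text}


# The look-alike spelling from the comment above, written with escapes so the
# mixed scripts are explicit: Greek Mu, Cyrillic i, Cyrillic es, Cyrillic o.
fake = "\u039c\u0456\u0441r\u043es\u043eft"
real = "Microsoft"

print(scripts_used(fake))
print(scripts_used(real))
```

A label that mixes several scripts is one signal a checker might flag, though real-world rules are considerably more involved.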

vandalism?

the edit on 20:52, 21 May 2010 by 188.249.3.139 shouldn't be reverted? —Preceding unsigned comment added by 193.226.6.227 (talk)

Recent changes list

- In Unicode pages & talkpages (source)

- In ISO 15924 pages & talkpages (source)

For your userpage:

- Updated. We could use a template that marks Unicode articles. -DePiep (talk) 23:43, 25 May 2016 (UTC)

Writing Systems still unable to be viewed properly in Unicode

As of September 2016, however, Unicode is unable to properly display the fonts by default for the following unicode writing systems on most browsers (namely, Microsoft Edge, Internet Explorer, Google Chrome and Mozilla Firefox):

- Balinese alphabet (ᬅᬓ᭄ᬱᬭᬩᬮᬶ)

- Batak alphabet (ᯘᯮᯮᯒᯖ᯲ ᯅᯖᯂ᯲, also used for the Karo, Simalungun, Pakpak and Angkola-Mandailing languages)

- Baybayin script (ᜊᜌ᜔ᜊᜌᜒᜈ᜔)

- Chakma script (𑄇𑄳𑄡𑄈𑄳𑄡 𑄉𑄳𑄡)

- Hanunó'o alphabet (ᜱᜨᜳᜨᜳᜢ)

- Limbu script (ᤔᤠᤱᤜᤢᤵ)

- Pollard script (𖼀𖼁𖼂𖼃𖼄𖼅𖼆𖼇)

- Saurashtra script (ꢱꣃꢬꢯ꣄ꢡ꣄ꢬ)

- Sharada script (𑆐𑆑𑆒𑆓𑆔𑆕𑆖𑆗𑆘)

- Sundanese script (ᮃᮊ᮪ᮞᮛ ᮞᮥᮔ᮪ᮓ)

- Sylheti Nagari (ꠍꠤꠟꠐꠤ ꠘꠣꠉꠞꠤ)

- Tai Tham alphabet (ᨲ᩠ᩅᩫᨾᩮᩥᩬᨦ)

Prior to Windows 7, scripts such as Burmese (မြန်မာဘာသာ), Khmer (ភាសាខ្មែរ), Lontara (ᨒᨚᨈᨑ), Cherokee (ᎠᏂᏴᏫᏯ), Coptic (ϯⲙⲉⲧⲣⲉⲙⲛ̀ⲭⲏⲙⲓ), Glagolitic (Ⰳⰾⰰⰳⱁⰾⰻⱌⰰ), Gothic (𐌲𐌿𐍄𐌹𐍃𐌺), Cuneiform (𐎨𐎡𐏁𐎱𐎡𐏁), Phags-pa (ꡖꡍꡂꡛ ꡌ), Traditional Mongolian (ᠮᠣᠨᠭᠭᠣᠯ ), Tibetan (ལྷ་སའི་སྐད་), Odia alphabet (ଓଡ଼ିଆ ) also had this font display issue, but these have since been resolved (i.e. they can now be 'seen' on most browsers).

Could someone also enable these fonts to be visible on Wikipedia browsers? --Sechlainn (talk) 02:23, 29 September 2016 (UTC)

- I don't know what you mean. Unicode is the underlying standard that makes it possible to use those scripts at all. Properly showing the texts is a matter of operating system, fonts and web browser. Even just OS and browser isn't good enough; what language packs and fonts are installed are important. There's nothing that anyone can in general do here.--Prosfilaes (talk) 02:49, 29 September 2016 (UTC)

- @Sechlainn: 1. Please do not engage in original research. — 2. Unicode is not intended to “display the fonts.” — 3. These are Unicode scripts, not writing systems. — 4. I can view all of the above except Sharada on my Firefox. — 5. There is no such thing as “Wikipedia browsers.” Love —LiliCharlie (talk) 03:02, 29 September 2016 (UTC)

Unicode 10.0

This version has just been released today, can you add information for this into the article? Proof from Emojipedia 86.22.8.235 (talk) 12:03, 20 June 2017 (UTC)

- I haven't seen anything on the Unicode site (http://www.unicode.org/) but will keep an eye out for an official announcement that 10.0 has been released. DRMcCreedy (talk) 18:01, 20 June 2017 (UTC)

- Version 10.0 now shows up as the latest version at http://www.unicode.org/standard/standard.html DRMcCreedy (talk) 18:44, 20 June 2017 (UTC)

- And the data files have been updated, so I think we can start updating Wikipedia now. BabelStone (talk) 19:23, 20 June 2017 (UTC)

"Presentation forms"

Can someone explain to me what a "presentation form" is? I can't find an answer anywhere. Pariah24 (talk) 11:19, 10 September 2017 (UTC)

Is there a unicode symbol for "still mode"?

I mean this symbol: https://www.iso.org/obp/ui#iec:grs:60417:5554 Seelentau (talk) 18:16, 12 January 2018 (UTC)

- It seems not. BabelStone (talk) 19:03, 12 January 2018 (UTC)

Two things this STILL does poorly

First, it still reads like a technical manual written by experts for experts. It still refuses to explain, upfront, what a codepoint is. The related concepts of character and glyph, as well as the fonts involved, all need to be discussed, imho. It should be made clear in the lead that Unicode has numerous failures: it is unable to correct past mistakes, and is (and will almost certainly continue to be) limited by political pressure (including by sovereign states such as China and N. Korea). Some of what is in the Unicode standard is there due to political concession, and of course all of it is there due to decisions made by committee(s). That's one thing.

The other is the article's virtually complete failure to tackle the Windows operating system, which is far and away the dominant OS in the world. Windows does not handle Unicode. In order for an application, be it a web browser or a spell-checker or a chat app, to handle Unicode, it has to work around the Windows character tables. (Of course, if the article doesn't explain what the difference is between a codepoint and a character (or "wide-character"), then you've failed before you begin.) I think, and propose, that at the LEAST a section under "Issues" should be created to simply state that, despite Microsoft's continued deceptive and misleading claims about its support for Unicode, it and its Windows OS do not directly support Unicode. (Microsoft's Word has impressive support, but still contains large omissions of the 136,000 codepoints.) 75.90.36.201 (talk) 20:22, 9 April 2018 (UTC)

- Bizarre and totally incorrect statement about Microsoft Windows not directly supporting Unicode. Of course Windows (excluding obsolete W95, W98 and ME) directly and natively supports Unicode, and no Unicode-aware application running on Windows needs to "work around the Windows character tables". BabelStone (talk) 10:26, 10 April 2018 (UTC)

- Windows does not support UTF-8 or any other coverage of Unicode in the 8-bit api, which means standard functions to open or list files do not work for filenames with Unicode in them. This makes it impossible to write portable software using the standard functions that works with Unicode filenames, therefore Windows does not support Unicode.Spitzak (talk) 21:34, 10 April 2018 (UTC)

- Does C# even support that "8-bit API"? What do you mean by "portable software"? I would note the POSIX standard doesn't support Unicode in file names either; only A-Za-z0-9, hyphen, period and underscore can be used in portable POSIX filenames. And non-POSIX MacOS/Plan 9/BeOS programs aren't portable, so I believe it is impossible to write portable software using Unicode.--Prosfilaes (talk) 23:18, 10 April 2018 (UTC)

- By "portable" I mean "source code that works on more than one platform", stop trying to redefine it as "every computer ever invented in history". Modern C/C++ compilers will preserve the 8-bit values in quoted strings and thus preserve UTF-8. Only VC++ is broken here, though you can outwit it by claiming that the source code is *not* Unicode (???!). POSIX allows all byte values other than '/' and null in a filename and thus allows UTF-8. POSIX does go way off course when discussing shell quoting syntax and you are right it disallows some byte values.Spitzak (talk) 01:32, 11 April 2018 (UTC)

- Oh and OS/X works exactly as I have stated, it in fact has some of the best Unicode support, though their insistence on normalizing the filenames rather than just preserving the byte sequence is a bit problematic. But at least all the software knows the filenames are UTF-8.Spitzak (talk) 01:34, 11 April 2018 (UTC)
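The point about normalizing filenames versus preserving the byte sequence can be illustrated with a minimal sketch. It only shows that the same visible name has different UTF-8 bytes under two Unicode normalization forms; the sample name is hypothetical, and which form a given file system applies is an assumption not stated in this thread.

```python
import unicodedata

# Hypothetical filename containing a precomposed "é" (U+00E9).
name = "caf\u00e9.txt"

# The same visible name, encoded as UTF-8 under two normalization forms.
nfc = unicodedata.normalize("NFC", name).encode("utf-8")  # é as one code point
nfd = unicodedata.normalize("NFD", name).encode("utf-8")  # e + combining acute

# A file system that normalizes names may hand back different bytes than the
# program wrote, so byte-for-byte comparison of filenames can fail.
same_bytes = (nfc == nfd)
same_text = (unicodedata.normalize("NFC", nfd.decode("utf-8")) == name)
```

Here `same_bytes` is false while `same_text` is true, which is why software that compares raw byte sequences and software that compares normalized text can disagree about whether two filenames match.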

- What do you mean by more than one platform? I have no reason to believe that C# doesn't support Unicode filenames, and thus every version of Windows NT since 4.0 (and thus every version of Windows since XP) supports portable code using Unicode filenames. If any non-POSIX MacOS X program is "portable", then so is a C# program targeting .NET 1.0.

- POSIX does not allow "all byte values other than '/' and null in a filename". To quote David Wheeler (https://www.dwheeler.com/essays/fixing-unix-linux-filenames.html):

- For a filename to be portable across implementations conforming to POSIX.1-2008, it shall consist only of the portable filename character set as defined in Portable Filename Character Set. Portable filenames shall not have the <hyphen> character as the first character since this may cause problems when filenames are passed as command line arguments.

- I then examined the Portable Filename Character Set, defined in 3.276 (“Portable Filename Character Set”); this turns out to be just A-Z, a-z, 0-9, <period>, <underscore>, and <hyphen> (aka the dash character). So it’s perfectly okay for a POSIX system to reject a non-portable filename due to it having “odd” characters or a leading hyphen.

- If strictly following IEEE 1003, the only major operating system standard, is important to you, filenames shall come only from that set of 65 characters. In practice it's better, but a program strictly conforming to the standard is so limited.

- So as far as I can tell, Windows is in the same boat as everyone else.--Prosfilaes (talk) 22:30, 11 April 2018 (UTC)
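The rule quoted above (the 65-character Portable Filename Character Set, with no leading hyphen) can be sketched as a small check. This is only an illustration of the quoted text, not an official POSIX validator; the function name `is_portable_filename` is hypothetical.

```python
import re

# Portable Filename Character Set as quoted above: A-Z, a-z, 0-9,
# period, underscore and hyphen, with no hyphen as the first character.
_PORTABLE_NAME = re.compile(r"[A-Za-z0-9._][A-Za-z0-9._-]*")


def is_portable_filename(name: str) -> bool:
    """Return True if `name` uses only the portable character set."""
    return _PORTABLE_NAME.fullmatch(name) is not None


checks = {
    "report_2018.txt": is_portable_filename("report_2018.txt"),
    "-rf": is_portable_filename("-rf"),
    "stringWithUnicodeInIt.æ": is_portable_filename("stringWithUnicodeInIt.æ"),
}
```

Under this strict reading only the first name passes: the second starts with a hyphen and the third contains a character outside the set, even though many real systems accept both.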

- You are continuing to insist that "portable" means "it works exactly the same on every single computer ever made", while I am going by the more popular definition of "it works on more than one computer". If you insist on such silly impossible requirements it is obvious you are refusing to admit you are wrong.Spitzak (talk) 00:55, 12 April 2018 (UTC)

- Portable, as strictly conforming to the POSIX standard, would be nice. Portable, as in running on multiple operating systems, is more realistic. Portable, as in running on multiple versions of the same OS, is barely passable. "It works on more than one computer" is not the "more popular" definition, as short of being tied into specialized one-off hardware like Deep Blue, you can always image the drive and load it into an emulator on another system.--Prosfilaes (talk) 19:08, 12 April 2018 (UTC)

- I think you have a point about the way we mention codepoints in the opening.

- As for numerous failures, "Unicode is a computing industry standard for the consistent encoding, representation, and handling of text expressed in most of the world's writing systems." If you understand what that says, it tells you that this is a committee project that pays a price for backward compatibility and works with the user community. To compare and contrast, the TRON character encoding doesn't work with sovereign states like China; devoid of such political pressure, it doesn't support Zhuang or Cantonese written in Han characters. Without multinational committees and political pressure, its support of anything that's not Japanese is half-assed and generally copied from Unicode.

- There are computer projects that work on the benevolent dictator standard, like the Linux kernel and Python. But I don't know of any that don't center around one chunk of source code, that involve an abstract standard with multiple equal implementations. If you have a seriously complex project, like encoding all of human writing, and it's going to be core for Microsoft and Apple and Google and Oracle, it's going to be a committee that responds to political needs. And standards are really interesting only if Microsoft and Apple and Google and Oracle care; stuff like Dart and C# may technically be standards, but users use the Google tools for Dart and follow what Google puts out, and likewise for Microsoft and C#. (Or SQL, where there is a standard with multiple implementations, but one still has to learn MSSQL and Oracle Database and MySQL separately. I don't know if that's an under-specified standard, or companies just ignoring it, but certainly the solution is not listening to the companies less.)--Prosfilaes (talk) 23:18, 10 April 2018 (UTC)

- fopen("stringWithUnicodeInIt.æ") does not do what anybody wants on Windows. On Linux it works. Therefore support for Unicode is better on Linux than Windows, which is not very impressive for Windows...Spitzak (talk) 01:25, 11 April 2018 (UTC)

- On Linux it may work; but "filenames" in Linux aren't names, they aren't strings, they're arbitrary byte-sequences that don't include 00h or 2Fh, and the most reasonable interpretation of the filename as a string may require choosing a character set on a per-filename basis; in worst-case scenarios, say a user in locale zh-TW mass renamed a bunch of files to start with 檔案 (archive), without paying attention to the fact they were named by a user in locale fr-FR.ISO8859-1 ("archivé"), you can end up with a byte string that makes no sense under any single character set.

- To put it shorter, that may work in Linux, but it may also fail to open a file with a user-visible name of "stringWithUnicodeInIt.æ", depending on locale settings.

- Not to mention that judging Windows by C alone is unfair and silly; why not C# or Python or other languages?--Prosfilaes (talk) 22:30, 11 April 2018 (UTC)
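The mixed-encoding scenario described above can be sketched as follows. It assumes the zh-TW user's locale used Big5 (the comment does not name the charset) and builds the byte string the rename would leave behind; the helper `decodes_cleanly` is hypothetical.

```python
# Assumption: the zh-TW locale encodes text as Big5; the fr-FR locale
# uses ISO 8859-1, as stated in the comment above.
prefix = "檔案".encode("big5")
original = "archivé".encode("iso-8859-1")

# The "filename" is just these bytes concatenated, with no record of
# which part was written under which character set.
raw_name = prefix + original


def decodes_cleanly(data: bytes, encoding: str) -> bool:
    """Return True if `data` is a valid byte sequence in `encoding`."""
    try:
        data.decode(encoding)
        return True
    except UnicodeDecodeError:
        return False


valid_utf8 = decodes_cleanly(raw_name, "utf-8")
valid_big5 = decodes_cleanly(raw_name, "big5")
# ISO 8859-1 maps every byte to some character, so it always "decodes",
# but the result is not the name either user intended.
latin1_text = raw_name.decode("iso-8859-1")
```

Neither UTF-8 nor Big5 accepts the combined bytes, and ISO 8859-1 yields mojibake for the prefix, so a program has to treat such a name as opaque bytes rather than as text in any one character set.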

- Because the C# API is a Microsoft development and they wrote the Linux version, and thus any failings are their fault.Spitzak (talk) 17:07, 13 April 2018 (UTC)