Mauchly's sphericity test

Mauchly's sphericity test or Mauchly's W is a statistical test used to validate a repeated measures analysis of variance (ANOVA). It was developed in 1940 by John Mauchly.

Sphericity

Sphericity is an important assumption of a repeated-measures ANOVA. It is the condition of equal variances among the differences between all possible pairs of within-subject conditions (i.e., levels of the independent variable). If sphericity is violated (i.e., if the variances of the differences between all combinations of the conditions are not equal), then the variance calculations may be distorted, which would result in an inflated F-ratio.[1] Sphericity can be evaluated when there are three or more levels of a repeated measure factor and, with each additional repeated measures factor, the risk for violating sphericity increases. If sphericity is violated, a decision must be made as to whether a univariate or multivariate analysis is selected. If a univariate method is selected, the repeated-measures ANOVA must be appropriately corrected depending on the degree to which sphericity has been violated.[2]

Measurement of sphericity

| Patient | Tx A | Tx B | Tx C | Tx A − Tx B | Tx A − Tx C | Tx B − Tx C |
|---|---|---|---|---|---|---|
| 1 | 30 | 27 | 20 | 3 | 10 | 7 |
| 2 | 35 | 30 | 28 | 5 | 7 | 2 |
| 3 | 25 | 30 | 20 | −5 | 5 | 10 |
| 4 | 15 | 15 | 12 | 0 | 3 | 3 |
| 5 | 9 | 12 | 7 | −3 | 2 | 5 |
| Variance: | | | | 17 | 10.3 | 10.3 |

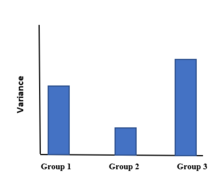

To further illustrate the concept of sphericity, consider a matrix representing data from patients who receive three different types of drug treatments in Figure 1. Their outcomes are represented on the left-hand side of the matrix, while differences between the outcomes for each pair of treatments are represented on the right-hand side. After obtaining the difference scores for all possible pairs of treatments, the variances of the differences can be compared. In the example in Figure 1, the variance of the differences between Treatment A and B (17) appears to be much greater than the variance of the differences between Treatment A and C (10.3) and between Treatment B and C (10.3). This suggests that the data may violate the assumption of sphericity. To determine whether statistically significant differences exist between the variances of the differences, Mauchly's test of sphericity can be performed.
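The variances in the bottom row of the table can be reproduced with a few lines of Python. This is a minimal sketch using only the standard library; the dictionary `scores` and the helper `difference_variances` are names chosen for illustration, and the sample variance (n − 1 denominator) is assumed because it matches the values shown in the table.

```python
from itertools import combinations
from statistics import variance

# Outcomes for the five patients in the example table, one list per treatment.
scores = {
    "Tx A": [30, 35, 25, 15, 9],
    "Tx B": [27, 30, 30, 15, 12],
    "Tx C": [20, 28, 20, 12, 7],
}

def difference_variances(data):
    """Return the sample variance of the difference scores for every pair of conditions."""
    result = {}
    for (name_1, x), (name_2, y) in combinations(data.items(), 2):
        diffs = [a - b for a, b in zip(x, y)]
        result[f"{name_1} - {name_2}"] = variance(diffs)  # n - 1 denominator
    return result

diff_vars = difference_variances(scores)
for pair, var in diff_vars.items():
    print(f"{pair}: {var:.1f}")
```

With the example data this prints 17.0 for the A and B pair and 10.3 for the other two pairs, matching the table.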

Interpretation

Developed in 1940 by John W. Mauchly,[3] Mauchly's test of sphericity is a popular test to evaluate whether the sphericity assumption has been violated. For the above example, the null hypothesis of sphericity and the alternative hypothesis of non-sphericity can be written in terms of the variances of the difference scores:

$$H_0: \sigma^2_{A-B} = \sigma^2_{A-C} = \sigma^2_{B-C}$$

$$H_1: \text{the variances of the differences are not all equal}$$

Interpreting Mauchly's test is fairly straightforward. When the probability of Mauchly's test statistic is greater than α (i.e., p > α, with α commonly being set to .05), we fail to reject the null hypothesis that the variances of the differences are equal. Therefore, we could conclude that the assumption has not been violated. However, when the probability of Mauchly's test statistic is less than or equal to α (i.e., p ≤ α), sphericity cannot be assumed and we would therefore conclude that there are significant differences between the variances of the differences.[4] Sphericity is always met for two levels of a repeated measure factor, so it is unnecessary to evaluate in that case.[1]
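As a rough illustration of how the test statistic and its p-value can be obtained, the sketch below computes Mauchly's W for the three-treatment example using only the Python standard library. Several things here are assumptions not stated in the text above: W is taken in its usual textbook form (the determinant of the covariance matrix of orthonormal contrast scores divided by a power of its mean eigenvalue), the p-value uses the common chi-square approximation, and the function `mauchly_three_levels` is a hypothetical helper restricted to exactly three levels, where the chi-square distribution has 2 degrees of freedom and its upper tail is simply exp(−x/2).

```python
import math
from statistics import variance

# Outcomes from the example table (five patients, three treatments).
tx_a = [30, 35, 25, 15, 9]
tx_b = [27, 30, 30, 15, 12]
tx_c = [20, 28, 20, 12, 7]

def covariance(x, y):
    """Sample covariance with an n - 1 denominator."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    return sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (len(x) - 1)

def mauchly_three_levels(a, b, c):
    """Mauchly's W and approximate p-value for exactly three repeated measures."""
    n = len(a)
    p = 2  # number of orthonormal contrasts, k - 1 with k = 3
    # Orthonormal (Helmert-type) contrast scores for each subject.
    y1 = [(ai - bi) / math.sqrt(2) for ai, bi in zip(a, b)]
    y2 = [(ai + bi - 2 * ci) / math.sqrt(6) for ai, bi, ci in zip(a, b, c)]
    # 2 x 2 covariance matrix of the contrast scores.
    t11, t22, t12 = variance(y1), variance(y2), covariance(y1, y2)
    det = t11 * t22 - t12 ** 2
    trace = t11 + t22
    w = det / (trace / p) ** p
    # Chi-square approximation with p(p + 1)/2 - 1 = 2 degrees of freedom.
    rho = 1 - (2 * p ** 2 + p + 2) / (6 * p * (n - 1))
    chi2 = -(n - 1) * rho * math.log(w)
    p_value = math.exp(-chi2 / 2)  # upper tail of a chi-square with 2 df
    return w, chi2, p_value

w, chi2, p_value = mauchly_three_levels(tx_a, tx_b, tx_c)
print(f"W = {w:.3f}, chi-square = {chi2:.3f}, p = {p_value:.3f}")
```

For the example data, W comes out at roughly 0.87 with a p-value of roughly 0.82, so the null hypothesis of sphericity would not be rejected at α = .05, even though the variances of the differences look unequal. With only five patients this is not surprising. For more than three levels, a general determinant routine and a chi-square distribution function from a statistics library would be needed.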

Statistical software should not provide output for a test of sphericity for two levels of a repeated measure factor; however, some versions of SPSS produce an output table with degrees of freedom equal to 0, and a period in place of a numeric p value.

Violations of sphericity

When sphericity has been established, the F-ratio is valid and therefore interpretable. However, if Mauchly's test is significant, the F-ratios produced must be interpreted with caution, as violations of this assumption can increase the Type I error rate and influence the conclusions drawn from the analysis.[4] In instances where Mauchly's test is significant, modifications need to be made to the degrees of freedom so that a valid F-ratio can be obtained.

In SPSS, three corrections are generated: the Greenhouse–Geisser correction (1959), the Huynh–Feldt correction (1976), and the lower-bound correction. Each of these corrections has been developed to alter the degrees of freedom and produce an F-ratio for which the Type I error rate is reduced. The actual F-ratio does not change as a result of applying the corrections; only the degrees of freedom change.[4]

The quantity estimated by these corrections is denoted by epsilon (ε) and can be found on Mauchly's test output in SPSS. Epsilon provides a measure of departure from sphericity. By evaluating epsilon, we can determine the degree to which sphericity has been violated. If the variances of differences between all possible pairs of groups are equal and sphericity is exactly met, then epsilon will be exactly 1, indicating no departure from sphericity. If the variances of differences between all possible pairs of groups are unequal and sphericity is violated, epsilon will be below 1. The further epsilon is from 1, the worse the violation.[5]
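The following sketch shows one way the three epsilon values and the corresponding corrected degrees of freedom could be computed for the example data. The formulas are the commonly cited textbook forms and are assumptions here, not taken from the text above: the Greenhouse–Geisser estimate is computed from the sample covariance matrix (Box's formula), the Huynh–Feldt estimate is the usual adjustment for a single-group design capped at 1, and the lower bound is 1/(k − 1). All function names are illustrative.

```python
def covariance_matrix(columns):
    """Sample covariance matrix (n - 1 denominator) of equal-length score lists."""
    n = len(columns[0])
    means = [sum(col) / n for col in columns]
    return [
        [
            sum((x - mi) * (y - mj) for x, y in zip(ci, cj)) / (n - 1)
            for cj, mj in zip(columns, means)
        ]
        for ci, mi in zip(columns, means)
    ]

def greenhouse_geisser_epsilon(columns):
    """Box / Greenhouse-Geisser estimate of epsilon from the covariance matrix."""
    k = len(columns)
    s = covariance_matrix(columns)
    mean_diag = sum(s[i][i] for i in range(k)) / k
    grand_mean = sum(sum(row) for row in s) / k ** 2
    row_means = [sum(row) / k for row in s]
    numerator = (k * (mean_diag - grand_mean)) ** 2
    denominator = (k - 1) * (
        sum(v ** 2 for row in s for v in row)
        - 2 * k * sum(m ** 2 for m in row_means)
        + k ** 2 * grand_mean ** 2
    )
    return numerator / denominator

def huynh_feldt_epsilon(gg, n, k):
    """Huynh-Feldt adjustment of the Greenhouse-Geisser estimate, capped at 1."""
    # Assumes a single-group design with n - 1 > (k - 1) * gg.
    hf = (n * (k - 1) * gg - 2) / ((k - 1) * (n - 1 - (k - 1) * gg))
    return min(hf, 1.0)

def corrected_df(epsilon, n, k):
    """Degrees of freedom of the F-test after multiplying both by epsilon."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n - 1)

# Example table: one list per treatment, five patients each.
data = [[30, 35, 25, 15, 9], [27, 30, 30, 15, 12], [20, 28, 20, 12, 7]]
n, k = len(data[0]), len(data)
gg = greenhouse_geisser_epsilon(data)
hf = huynh_feldt_epsilon(gg, n, k)
lower_bound = 1 / (k - 1)
for name, eps in [("Greenhouse-Geisser", gg), ("Huynh-Feldt", hf), ("lower bound", lower_bound)]:
    df1, df2 = corrected_df(eps, n, k)
    print(f"{name}: epsilon = {eps:.3f}, df = ({df1:.2f}, {df2:.2f})")
```

For the example data the Greenhouse–Geisser estimate is about 0.89, the Huynh–Feldt estimate exceeds 1 before capping, and the lower bound is 0.5. The F-ratio itself is left untouched; only the degrees of freedom used to look up its p-value are scaled.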

Of the three corrections, Huynh–Feldt is considered the least conservative, Greenhouse–Geisser more conservative, and the lower-bound correction the most conservative. When epsilon is > .75, the Greenhouse–Geisser correction is believed to be too conservative: it reduces the degrees of freedom more than necessary, so the test would too often fail to reject a false null hypothesis. Collier and colleagues[6] showed this was true when epsilon was extended to as high as .90. The Huynh–Feldt correction, however, is believed to be too liberal because it overestimates sphericity; it reduces the degrees of freedom too little, so the Type I error rate may remain inflated.[7] Girden[8] recommended a solution to this problem: when epsilon is > .75, the Huynh–Feldt correction should be applied, and when epsilon is < .75 or nothing is known about sphericity, the Greenhouse–Geisser correction should be applied.

An alternative procedure is to use the multivariate test statistics (MANOVA), since they do not require the assumption of sphericity.[9] However, this procedure can be less powerful than a repeated measures ANOVA, especially when the sphericity violation is not large or sample sizes are small.[10] O’Brien and Kaiser[11] suggested that when there is a large violation of sphericity (i.e., epsilon < .70) and the sample size is greater than k + 10 (i.e., the number of levels of the repeated measures factor + 10), a MANOVA is more powerful; in other cases, the repeated measures ANOVA should be selected.[5] Additionally, the power of MANOVA is contingent upon the correlations between the dependent variables, so the relationship between the different conditions must also be considered.[2]
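The rules of thumb attributed to Girden and to O’Brien and Kaiser can be summarised as a small decision helper. This is only an illustrative sketch: the function name `suggest_analysis`, the order in which the two rules are combined, and the handling of an epsilon of exactly .75 (treated here like the "< .75" case) are assumptions, not part of either recommendation.

```python
def suggest_analysis(epsilon, n_subjects, k_levels):
    """Apply the rules of thumb quoted in the text to an epsilon estimate."""
    # O'Brien and Kaiser: large violation and a sufficiently large sample.
    if epsilon < 0.70 and n_subjects > k_levels + 10:
        return "MANOVA"
    # Girden: Huynh-Feldt for mild violations, otherwise Greenhouse-Geisser.
    if epsilon > 0.75:
        return "repeated measures ANOVA with Huynh-Feldt correction"
    return "repeated measures ANOVA with Greenhouse-Geisser correction"

print(suggest_analysis(epsilon=0.60, n_subjects=30, k_levels=3))
print(suggest_analysis(epsilon=0.60, n_subjects=10, k_levels=3))
print(suggest_analysis(epsilon=0.90, n_subjects=10, k_levels=3))
```

The helper does not account for the correlations between the dependent variables, which also affect the power of MANOVA.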

SPSS provides an F-ratio from four different methods: Pillai's trace, Wilks’ lambda, Hotelling's trace, and Roy's largest root. In general, Wilks’ lambda has been recommended as the most appropriate multivariate test statistic to use.

Criticisms

While Mauchly's test is one of the most commonly used tests of sphericity, it tends to miss departures from sphericity in small samples and to flag even trivial departures in large samples. Consequently, the sample size has an influence on the interpretation of the results.[4] In practice, the assumption of sphericity is extremely unlikely to be exactly met, so it is prudent to correct for a possible violation without actually testing for one.

References

1. Hinton, P. R., Brownlow, C., & McMurray, I. (2004). SPSS Explained. Routledge.

2. Field, A. P. (2005). Discovering Statistics Using SPSS. Sage Publications.

3. Mauchly, J. W. (1940). "Significance Test for Sphericity of a Normal n-Variate Distribution". The Annals of Mathematical Statistics. 11 (2): 204–209. doi:10.1214/aoms/1177731915. JSTOR 2235878.

4. "Sphericity". Laerd Statistics.

5. "Sphericity in Repeated Measures Analysis of Variance" (PDF).

6. Collier, R. O., Jr., Baker, F. B., Mandeville, G. K., & Hayes, T. F. (1967). "Estimates of test size for several test procedures based on conventional variance ratios in the repeated measures design". Psychometrika. 32 (3): 339–353. doi:10.1007/bf02289596. PMID 5234710. S2CID 42325937.

7. Maxwell, S. E. & Delaney, H. D. (1990). Designing experiments and analyzing data: A model comparison perspective. Belmont: Wadsworth.

8. Girden, E. (1992). ANOVA: Repeated measures. Newbury Park, CA: Sage.

9. Howell, D. C. (2009). Statistical Methods for Psychology. Wadsworth Publishing.

10. "Mauchly Test" (PDF). Archived from the original (PDF) on 2013-05-11. Retrieved 2012-04-29.

11. O'Brien, R. G. & Kaiser, M. K. (1985). "The MANOVA approach for analyzing repeated measures designs: An extensive primer". Psychological Bulletin. 97: 316–333. doi:10.1037/0033-2909.97.2.316.

Further reading

- Girden, E. R. (1992). ANOVA: repeated measures. Newbury Park, CA: Sage.

- Greenhouse, S. W., & Geisser, S. (1959). "On methods in the analysis of profile data." Psychometrika, 24, 95–112.

- Huynh, H., & Feldt, L. S. (1976). "Estimation of the Box correction for degrees of freedom from sample data in randomised block and split-plot designs." Journal of Educational Statistics, 1, 69–82.

- Mauchly, J. W. (1940). "Significance test for sphericity of a normal n-variate distribution." The Annals of Mathematical Statistics, 11, 204–209.